I merged all my home servers together to improve performance while reducing power usage.

Preface

In one of my first ever posts, I shared my all-in-one server which has since been split into a separate application server running pure Docker containers and a 72TB FreeNAS storage server.

There was an imbalance, however. The storage server was far more powerful than the application server, yet it acted as an archive and was used infrequently. It didn't need its 128GB of RAM and Intel Xeon E5 CPU for that. If I swapped the hardware with the application server, the combination of 32GB of RAM and 72TB of raw disks would have made FreeNAS/ZFS unstable. I decided the best action was to combine my application and storage servers.

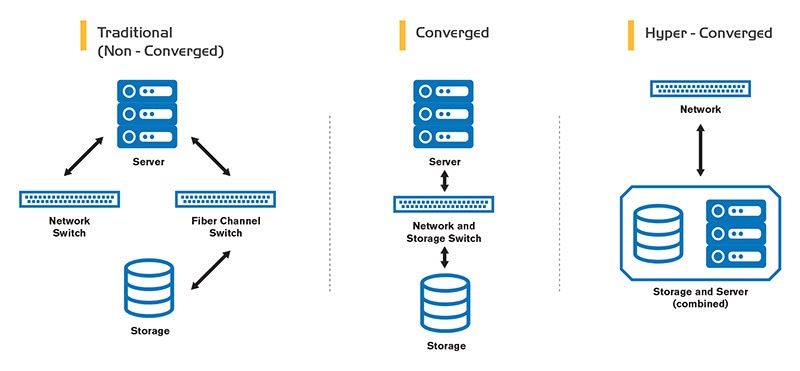

The act of merging application, storage and network devices is known as hyper-converged computing. This excellent diagram from helixstorm outlines the different types of infrastructure possible.

To achieve this I needed to:

- Get more drives into my already cramped server

- Run ZFS on Linux instead of FreeNAS. Docker containers aren't supported on FreeNAS

- Enable monitoring for ZFS on Linux

Adding more drives

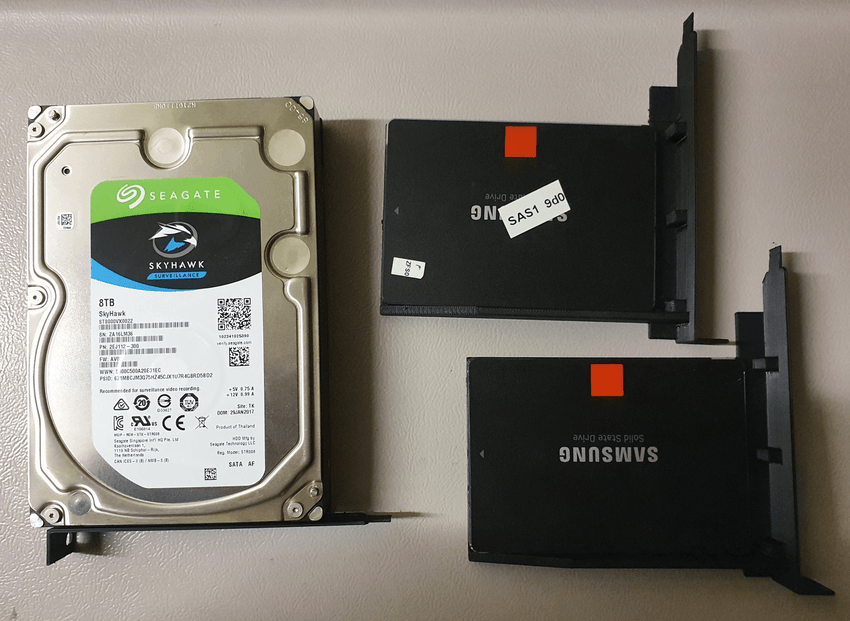

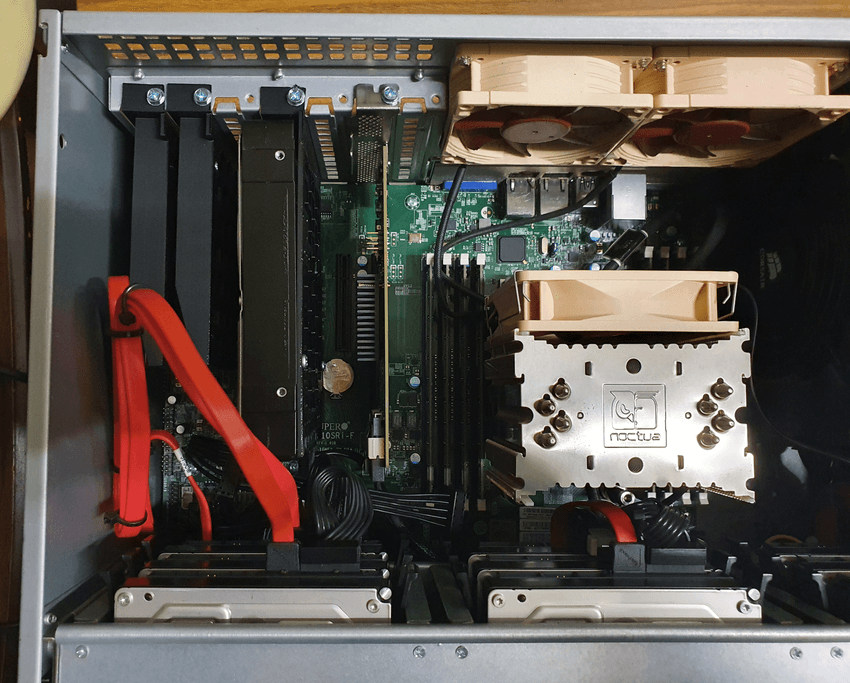

My Norco RPC-431 only supports 9x3.5" drives and 1x2.5" drive. I needed to add an extra 1x3.5" drive and 2x2.5" drives for my security cameras and the operating system. My Supermicro X10SRi-F motherboard also has only 10 SATA ports. I had to get a bit creative.

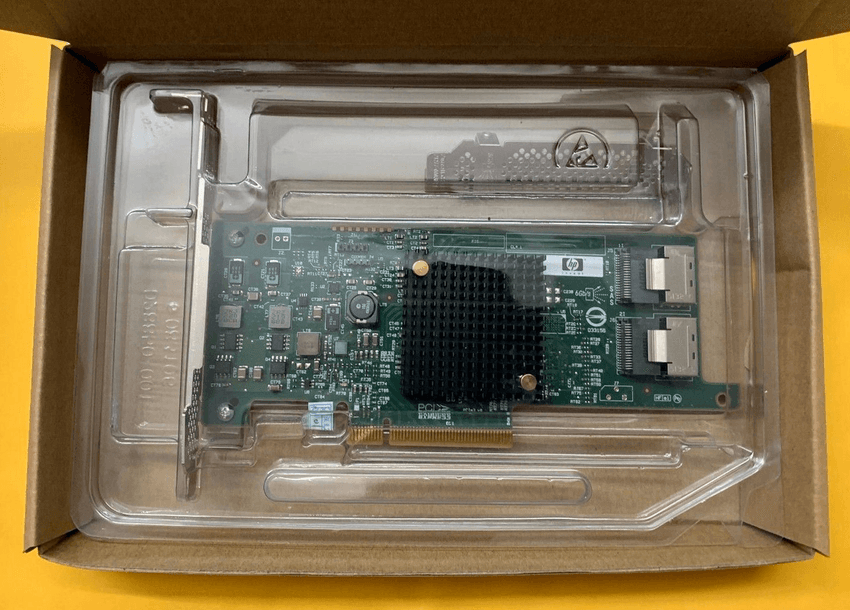

HP H220 (LSI SAS2308)

Adding more SATA ports is an easy task with a RAID card running in IT mode. Thanks to the ServeTheHome forums, I was able to go through a list of all the models and their OEM counterparts.

I decided I wanted a SAS2308 or newer card as they support SATA3 (6Gb/s) speeds for my SSDs. Searching each one on eBay, I found the HP H220 for around AUD $55.

I also grabbed two mini-SAS (SFF-8087) to SATA cables from AliExpress for around AUD $8.

When I received the card, I performed a firmware upgrade following this guide from tifan.net. Since the files there may one day disappear, I've uploaded the PH20.00.07.00-IT.zip firmware and P14 sas2flash.efi tool on my site too. Here are the EFI shell commands:

$ sas2flash.efi -o -e 7

$ sas2flash.efi -f 2308T207.ROM

$ sas2flash.efi -b mptsas2.rom

$ sas2flash.efi -b x64sas2.rom

$ sas2flash.efi -o -sasaddhi <first 7 digits of SN>

3D Printed PCI Drive Mounts

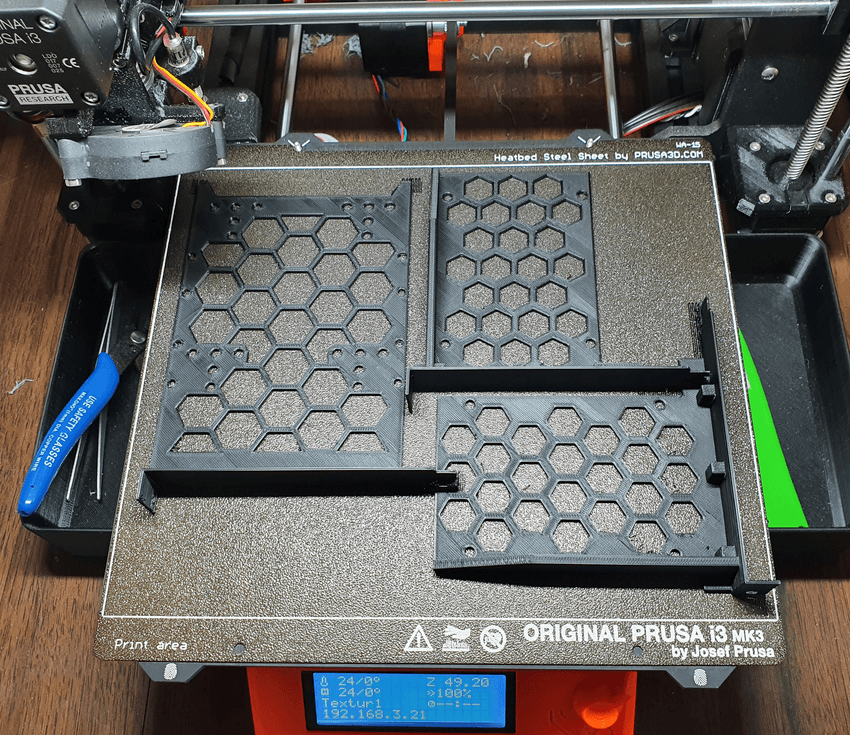

The only free space left in the case was where the PCI slots were. I found some 3D prints on Thingiverse for hard drive PCI mounts:

I was able to squeeze one 3.5" and two 2.5" together on my 3D printer.

I mounted them with normal screws.

With the drives and RAID card installed into the system, before wiring up the spaghetti:

I re-installed Ubuntu from scratch to be safe. I was able to follow my previous post on setting up RAID1 for 18.04 on 20.04 with some small wording changes.

ZFS on Linux

Importing the ZFS ZPool in Linux

First I installed the zfs package:

$ apt install zfsutils-linux

Running zpool import lists all the possible pools that can be imported:

$ zpool import

pool: files

id: 11082528084113358057

state: ONLINE

status: The pool was last accessed by another system.

action: The pool can be imported using its name or numeric identifier and the '-f' flag.

see: http://zfsonlinux.org/msg/ZFS-8000-EY

config:

files ONLINE

raidz2-0 ONLINE

sdi ONLINE

sdk ONLINE

sdj ONLINE

sdd ONLINE

sda ONLINE

sdm ONLINE

sdl ONLINE

sdc ONLINE

sdb ONLINE

I imported the pool with the special -d /dev/disk/by-id option so it auto-mounts after reboots:

$ zpool import -f -d /dev/disk/by-id files

I also performed an upgrade to get the latest features:

$ zpool upgrade files

This system supports ZFS pool feature flags.

Enabled the following features on 'files':

large_dnode

edonr

userobj_accounting

encryption

project_quota

allocation_classes

resilver_defer

bookmark_v2

I also changed the mountpoint, as the pool mounted to /files by default:

$ mkdir /mnt/files

$ zfs set mountpoint=/mnt/files files

$ zfs get mountpoint files

NAME PROPERTY VALUE SOURCE

files mountpoint /mnt/files local

Setting up SMB (Samba)

FreeNAS made sharing zpools easy with GUI options for SMB/CIFS, FTP, etc. I had to set up SMB myself and you can find it in my Ansible playbook. The only thing it doesn't do is set smbpasswd which isn't possible with Ansible yet.

$ sudo smbpasswd -a calvin

New SMB password:

Retype new SMB password:

added user calvin

If you're using Nextcloud like me, you can update the External Storage app to use a local mountpoint instead of SMB, as I did.
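For readers without the playbook to hand, a share for the pool could be declared along these lines. This is only a sketch and not the playbook's actual contents: the share name `files`, the `write_share_stanza` helper and the chosen options are my assumptions, while the path and user come from the commands above.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal smb.conf share stanza.
# Arguments: share name, path to share, user allowed to connect.
write_share_stanza() {
    cat <<EOF
[$1]
    path = $2
    valid users = $3
    read only = no
    browseable = yes
EOF
}

# Print the stanza for review; it is not written to /etc/samba/smb.conf automatically.
write_share_stanza files /mnt/files calvin
```

After adding a stanza like this to /etc/samba/smb.conf, running testparm checks the syntax, and restarting smbd applies the change.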

Monitoring

FreeNAS provided alerts for ZFS and disk health events. To replicate this as closely as possible, I used several different tools. You can find these in my Ansible ZFS playbook.
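Before relying on any of the tools, a quick manual check from the shell can confirm the pool looks healthy. The following is a minimal sketch and not part of my playbook. The `status_is_healthy` helper is hypothetical, and it assumes `zpool status -x` prints a line containing "healthy" when nothing needs attention.

```shell
#!/bin/sh
# Hypothetical helper: succeed if `zpool status -x` output reports a healthy state.
# `zpool status -x` prints "all pools are healthy" (or "pool '<name>' is healthy"
# when a pool is named) if nothing needs attention.
status_is_healthy() {
    case "$1" in
        *"all pools are healthy"*|*"is healthy"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Only query ZFS when the zpool command is installed.
if command -v zpool >/dev/null 2>&1; then
    if status_is_healthy "$(zpool status -x 2>&1)"; then
        echo "ZFS: OK"
    else
        echo "ZFS: needs attention" >&2
    fi
fi
```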

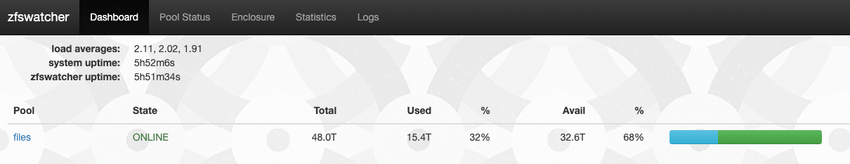

zfswatcher

zfswatcher is a ZFS pool monitoring and notification daemon. A Docker image is provided by Pectojin. It is able to send email alerts on a variety of ZFS events.

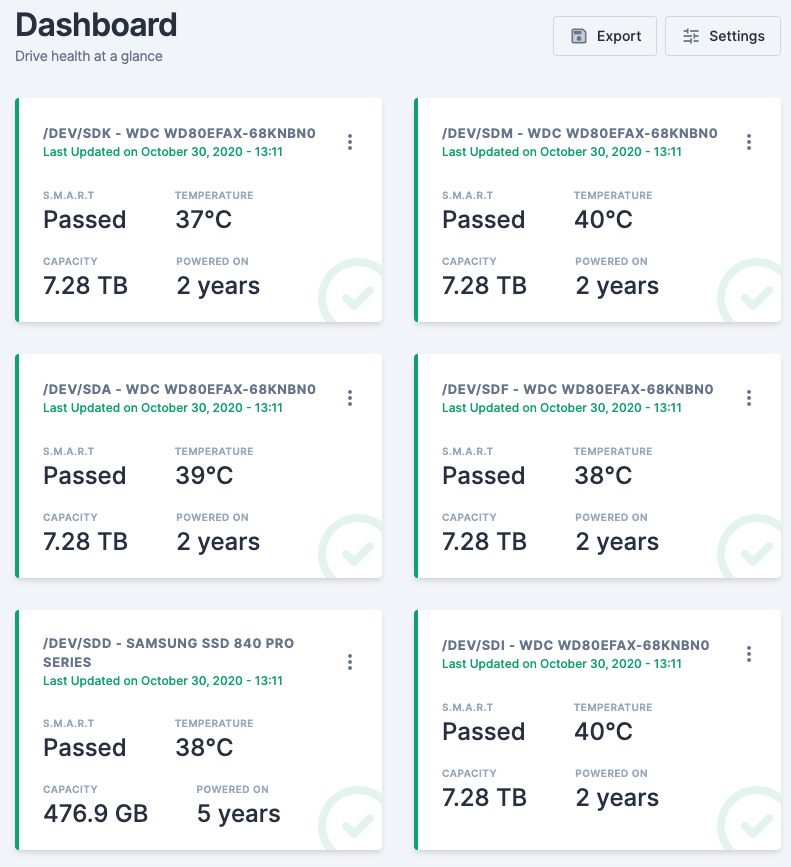

scrutiny

scrutiny provides a web interface for drive S.M.A.R.T. monitoring. It is also able to send alerts to a lot of services through its shoutrrr integration.
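scrutiny collects its data through smartctl, so a single drive can also be spot-checked by hand. A rough sketch follows. `smart_passed` is a hypothetical helper, `/dev/sda` is only an example device, and the matched strings are the health lines that `smartctl -H` prints for ATA and SAS drives.

```shell
#!/bin/sh
# Hypothetical helper: succeed if `smartctl -H` output reports a passing drive.
# ATA drives print "... test result: PASSED"; SCSI/SAS drives print "SMART Health Status: OK".
smart_passed() {
    printf '%s\n' "$1" | grep -Eq 'test result: PASSED|SMART Health Status: OK'
}

# Example device; the check needs root and the smartmontools package.
dev=/dev/sda
if command -v smartctl >/dev/null 2>&1 && [ -b "$dev" ]; then
    if smart_passed "$(smartctl -H "$dev" 2>&1)"; then
        echo "$dev: SMART OK"
    else
        echo "$dev: SMART check did not pass" >&2
    fi
fi
```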

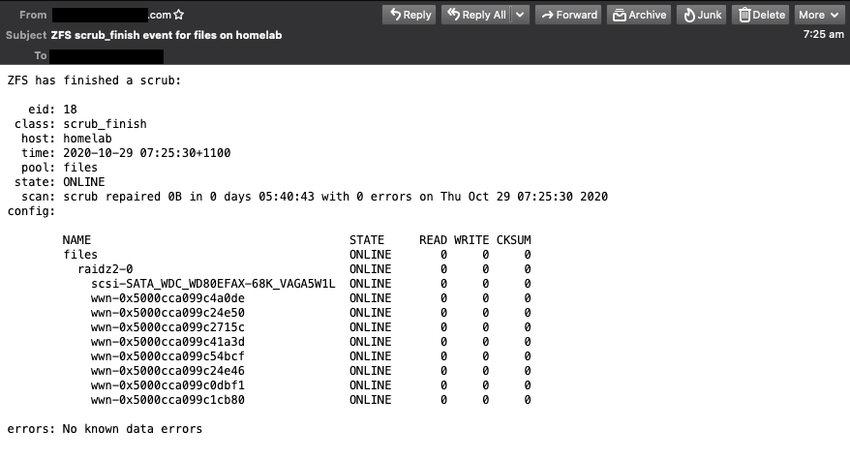

ZFS ZED

zed is the built-in ZFS on Linux Event Daemon that monitors events generated by the ZFS kernel module. Whenever a new ZFS event occurs, ZED will run any ZEDLETs (ZFS Event Daemon Linkage for Executable Tasks) created under /etc/zfs/zed.d/. I didn't add any new ZEDLETs and went with the defaults provided.
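To illustrate what a ZEDLET looks like, here is a minimal sketch of a custom one. I did not deploy this. The file name and the `format_event` helper are hypothetical. It relies on ZED passing event details in `ZEVENT_*` environment variables, and on the `all-` file name prefix to be invoked for every event.

```shell
#!/bin/sh
# Sketch of a custom ZEDLET, e.g. saved as /etc/zfs/zed.d/all-log-example.sh
# (hypothetical file name; the "all-" prefix asks ZED to run it for every event).

format_event() {
    # Fall back to "unknown" when ZED did not set a variable for this event.
    printf 'zfs event: subclass=%s pool=%s\n' \
        "${ZEVENT_SUBCLASS:-unknown}" "${ZEVENT_POOL:-unknown}"
}

# ZED sets ZEVENT_EID for each event, so this only logs when run by ZED.
if [ -n "${ZEVENT_EID:-}" ]; then
    logger -t zed-example "$(format_event)"
fi
```

ZED only runs ZEDLETs that are executable and owned by root, so a script like this would need `chmod +x` after being copied into place.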

A mail provider is required on the host as well. I went with msmtp as ssmtp was no longer maintained and postfix looked too heavy for simple emails. I added all the email configs to the ZED config file at /etc/zfs/zed.d/zed.rc and tested with a zpool scrub.
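The email settings in zed.rc look roughly like the sketch below. These are not my exact values. The address is a placeholder, and `ZED_EMAIL_PROG="mail"` assumes a `mail` command (for example from bsd-mailx or mailutils) that hands messages to msmtp, such as via the msmtp-mta package. The variable names themselves come from the stock zed.rc.

```shell
# Sketch of email-related settings in /etc/zfs/zed.d/zed.rc (a shell fragment read by ZED).
# Placeholder address; replace with the mailbox that should receive alerts.
ZED_EMAIL_ADDR="admin@example.com"
# Assumption: a `mail` command is installed and delivers through msmtp.
ZED_EMAIL_PROG="mail"
# @SUBJECT@ and @ADDRESS@ are substituted by ZED before the command is run.
ZED_EMAIL_OPTS="-s '@SUBJECT@' @ADDRESS@"
# Notify even when the pool is healthy, so a clean scrub still produces a test email.
ZED_NOTIFY_VERBOSE=1
# Minimum number of seconds between notifications for a similar event.
ZED_NOTIFY_INTERVAL_SECS=3600
```

After editing the file, restarting the zfs-zed service and running `zpool scrub files` should produce an email once the scrub finishes.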